Goal cycle environments

I’ve been thinking about ways to construct challenging gridworld scenarios in which the behavior of expert agents might provide cues that ease learning for novice agents. I’ve focused on tasks in which navigation is central, since the an agent’s movement can always in principle be visible to other agents. Tasks like this often resemble random maze navigation: an agent spawns in an environment with a random layout and receives a reward after navigating to a certain (perhaps randomly placed) goal tile. The episode ends either after the agent reaches the goal, or after a fixed time limit (with the agent respawning each time it reaches the goal).

In that sort of environment, the main skill exhibited by expert agents is efficiently searching a map (not running in to walls, not retracing steps, etc). Non-expert agents don’t stand to learn all that much by observing the experts.

I developed a class of environments called goal cycle that I’ve been using to study hard-exploration tasks in both single and multi-agent scenarios.

Goal cycle environments

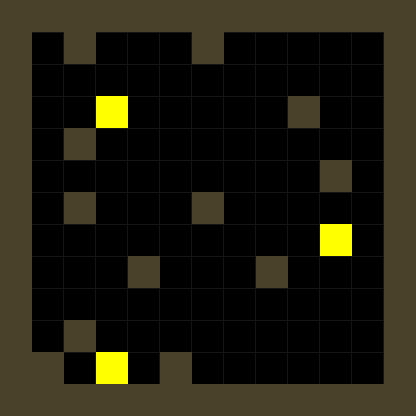

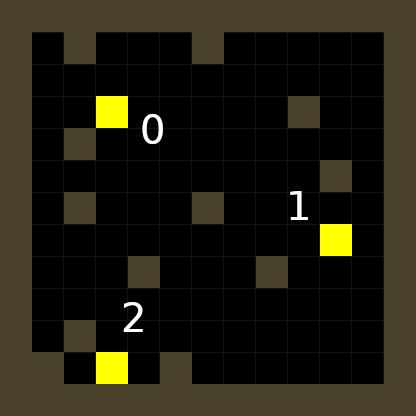

The grid in the example below has size 13x13 and contains 3 goals and 11 pieces of clutter. The goals and clutter are placed randomly. Agents can step on and see through the goal tiles and other agents, but they cannot step on or see through wall or clutter tiles.

Each goal tile has an id between 0 and 2 (if there are 3 goals, otherwise between 0 and N-1). Agents are rewarded the first time they step on a goal in each episode. They are also rewarded any time they navigate from goal $x$ to goal $(x+1)\%N$. Agents are penalized for stepping on a goal tile out of order and the size of this penalty is configurable.

While the goal identities are visually indistinguishable to the agents, the Marlgrid goal cycle environments allow agents to directly see the (scalar) rewards dispensed by the environments as part of their observations. I’ve also added support for a visual prestige mechanism that causes agents to change color in response to rewards or penalties (in their own egocentric views as well as those of other agents).

The agent shown above was trained with PPO-LSTM. The whole map is shown on the left, but the agent only sees the partial view shown on the right. This agent was trained from images and didn’t receive any positional/directional/reward information directly from the environment. With prestige coloring, the agent starts off red and becomes somewhat bluer with each positive reward. Penalties (negative rewards) reset the agent’s color to red. In this episode, the agent traverses the goals in the order [0, 1, 2, 0, 1, 2, 0, 1, 2, 0, 1, 2, 0, 1, 2, 0] and happens not to make any mistakes.

Hard exploration

When the penalty is large the reward signal becomes deceptive and goal cycle becomes a hard exploration problem (complete with sparse and delayed rewards). Some of the well-known environments that researchers have used to measure progress in this challenging problem domain include the Atari game Montezuma’s Revenge and the door/key and multi-room gridworld environments. The difficulty of exploration varies with the size of the penalty, and it’s

Agents incur penalties for exploring possible sequences, so novice agents tend to learn to avoid the goal tiles:

When the penalty is large, an agent can obtain a maximal return in an episode by cycling between the three goals in a certain order (when the penalty is large). The agent must first identify the correct order. Even optimal policies might incur penalties before discovering the corect order, since the bonus tiles can’t be disambiguated without stepping on them. In a three-goal environment, this would happen half the time. Identifying the proper order constitutes a hard exploration problem that must be overcome in each episode.

Environments with higher a penalty $p$ for messing up the goal orders are more difficult to explore.